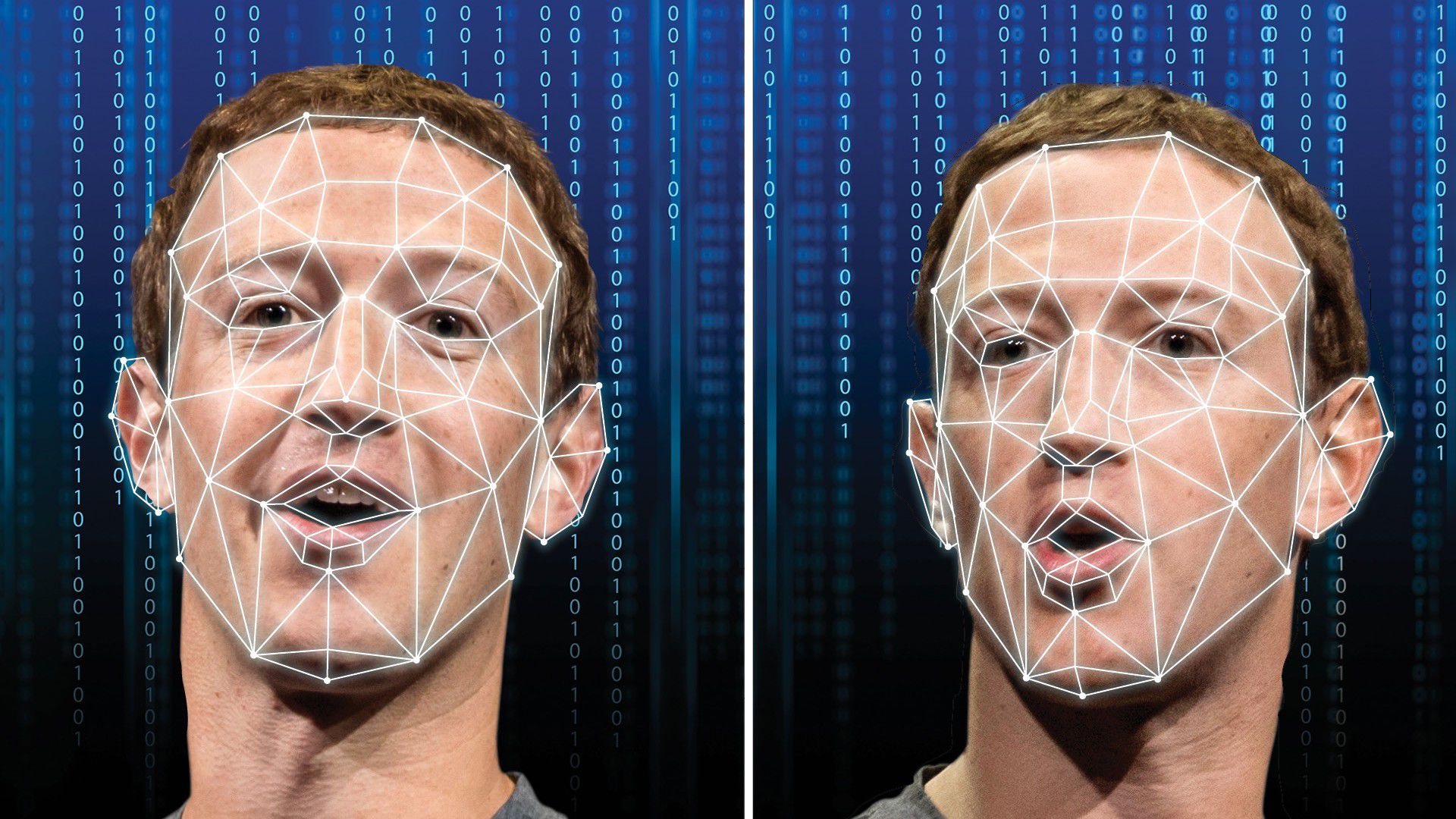

According to Reuters, Facebook recently announced a new policy on manipulated content that focuses on deepfake videos, that is, videos generated using AI tools.

Facebook said that it will remove “misleading manipulated media” only if it meets both of the following criteria, stated here in the company's own words:

- It has been edited or synthesized – beyond adjustments for clarity or quality – in ways that aren’t apparent to an average person and would likely mislead someone into thinking that a subject of the video said words that they did not actually say. And:

- It is the product of artificial intelligence or machine learning that merges, replaces or superimposes content onto a video, making it appear to be authentic.

The social media giant stated on its blog that with this step it is “strengthening [its] policy toward misleading manipulated videos that have been identified as deepfakes”. However, Facebook told Reuters that it does not intend to remove videos that were altered using low-tech methods. A recent example is the edited video of Nancy Pelosi, the American Democratic Party politician known for openly criticizing President Trump. The original footage was edited to make Pelosi appear “drunk or unwell”, and the altered version drew a large audience and was shared by her political opponents on social media. Facebook considers the measures it took in that case sufficient: it reduced the video's distribution and attached a warning that it was false, but did not remove it, because it had not been altered using AI.

Politicians have repeatedly criticized Facebook over its policies on disinformation. In its defence, alongside the new policy, Facebook highlighted its collaboration with Reuters on a “free online training course” titled “Identifying and Tackling Manipulated Media”, available in four languages: English, Spanish, French and Arabic. The course aims to raise awareness and to help newsrooms, which often depend on content submitted by third parties, identify deepfakes and altered media.

Deepfakes are commonly created using “two AI algorithms which work together in something called ‘a generative adversarial network’, or Gan”. One of the two algorithms, the discriminator, learns from a dataset of real examples to tell real videos from fake ones. The other, the generator, uses the “same dataset” to create fake videos that the discriminator cannot tell apart from real ones. Because the two are trained against each other, each pushes the other to improve: the discriminator gets better at spotting fakes, and the generator gets better at fooling it. Although these tools are not yet perfect, they are powerful and improving, which will make deepfakes increasingly difficult to detect unless the necessary measures are taken.
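The adversarial setup described above can be illustrated with a deliberately tiny example. What follows is a minimal Python sketch, assuming a one-dimensional toy problem rather than video: the “real data” are numbers drawn from a normal distribution centred on 3, the generator simply shifts random noise by a learned offset `b`, and the discriminator is a single logistic unit with parameters `w` and `c`. All function names, parameters and numbers here (`train`, `real_mean`, the learning rate, the step count) are hypothetical choices made for illustration; real deepfake systems use deep neural networks trained on images or video frames.

```python
# Toy 1-D GAN: an illustrative assumption, NOT a deepfake model.
# All names and hyperparameters below are hypothetical.
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def discriminate(d: dict, x: float) -> float:
    """Discriminator: estimated probability that sample x is real."""
    return sigmoid(d["w"] * x + d["c"])


def generate(g: dict, z: float) -> float:
    """Generator: turns random noise z into a fake sample by shifting it by b."""
    return z + g["b"]


def discriminator_step(d: dict, real: list, fake: list, lr: float) -> None:
    """One gradient-ascent step on mean log D(real) + mean log(1 - D(fake))."""
    p_real = [discriminate(d, x) for x in real]
    p_fake = [discriminate(d, x) for x in fake]
    grad_w = (sum((1 - p) * x for p, x in zip(p_real, real)) / len(real)
              - sum(p * x for p, x in zip(p_fake, fake)) / len(fake))
    grad_c = sum(1 - p for p in p_real) / len(real) - sum(p_fake) / len(fake)
    d["w"] += lr * grad_w
    d["c"] += lr * grad_c


def generator_step(g: dict, d: dict, noise: list, lr: float) -> None:
    """One gradient-ascent step on mean log D(G(z)) (the "non-saturating" loss).

    For a logistic discriminator, d/db log D(z + b) = (1 - D(z + b)) * w.
    """
    grad_b = sum((1 - discriminate(d, generate(g, z))) * d["w"]
                 for z in noise) / len(noise)
    g["b"] += lr * grad_b


def train(real_mean: float = 3.0, steps: int = 4000, batch: int = 64,
          lr: float = 0.05, seed: int = 0) -> tuple:
    """Alternate discriminator and generator updates; return (generator, discriminator)."""
    rng = random.Random(seed)
    d = {"w": 0.0, "c": 0.0}  # discriminator parameters
    g = {"b": 0.0}            # generator parameter: fakes start centred on 0
    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [generate(g, rng.gauss(0.0, 1.0)) for _ in range(batch)]
        discriminator_step(d, real, fake, lr)
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        generator_step(g, d, noise, lr)
    return g, d


if __name__ == "__main__":
    g, d = train()
    print(f"generator offset b = {g['b']:.3f} (real mean is 3.0)")
```

With these settings the generator's offset `b` should move from 0 towards the real mean of 3; once fake and real samples follow the same distribution, the discriminator can no longer separate them and its weight `w` shrinks towards 0. The generator step maximises log D(G(z)) rather than minimising log(1 − D(G(z))), a common practical choice that keeps the generator's gradient from vanishing while the discriminator still wins easily. Real GAN training is far less well-behaved than this toy and often oscillates or collapses.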